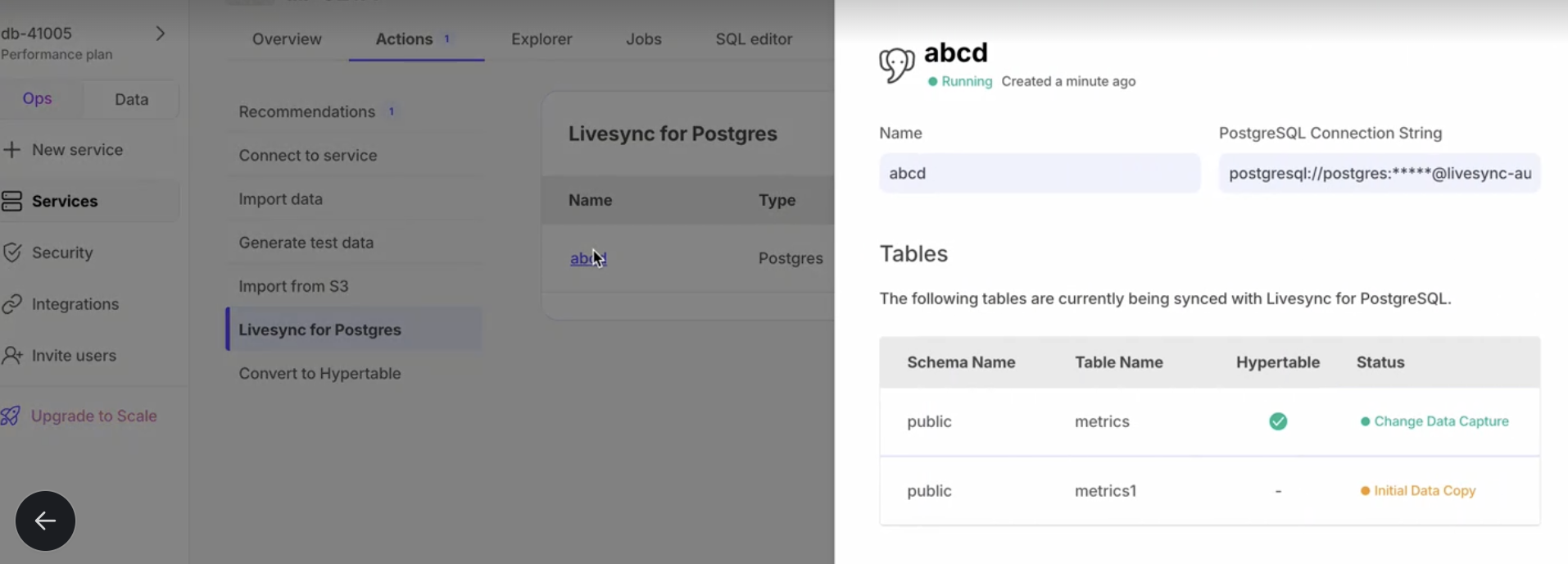

You use Livesync to synchronize all the data, or specific tables, from a PostgreSQL database instance to your Timescale Cloud service in real time. You run Livesync continuously, turning PostgreSQL into a primary database with your Timescale Cloud service as a logical replica. This enables you to leverage Timescale Cloud’s real-time analytics capabilities on your replica data.

Livesync leverages the well-established PostgreSQL logical replication protocol. By relying on this protocol, Livesync ensures compatibility, familiarity, and a broader knowledge base, making it easier for you to adopt Livesync and integrate your data.
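
Because Livesync builds on PostgreSQL logical replication, the source instance must be configured for it. PostgreSQL requires `wal_level = logical`, and it needs free replication slots and WAL sender processes. The following is a minimal Python sketch of such a check. It is not part of Livesync and makes two assumptions. First, you have already read the settings into a dictionary, for example with `SHOW wal_level;`. Second, the helper name `check_logical_replication_settings` is hypothetical. Livesync may apply additional or different checks.

```python
from typing import Mapping


def check_logical_replication_settings(settings: Mapping[str, str]) -> list[str]:
    """Return a list of problems that would block logical replication.

    `settings` maps PostgreSQL parameter names to the string values returned
    by `SHOW <name>;` on the source instance. An empty list means the checked
    prerequisites are met. Hypothetical helper, not a Livesync API.
    """
    problems: list[str] = []

    # Logical decoding only works when the WAL carries logical information.
    if settings.get("wal_level") != "logical":
        problems.append("wal_level must be 'logical'")

    # A logical replication connection uses a replication slot and a WAL sender.
    for name in ("max_replication_slots", "max_wal_senders"):
        value = settings.get(name, "0")
        if not value.isdigit() or int(value) < 1:
            problems.append(f"{name} must be at least 1")

    return problems


print(check_logical_replication_settings(
    {"wal_level": "replica", "max_replication_slots": "10", "max_wal_senders": "10"}
))
```

An empty result only means these three settings look right. It does not guarantee that enough slots are free for every connection Livesync opens.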

You use Livesync for data synchronization, rather than migration. Livesync can:

- Copy existing data from a PostgreSQL instance to a Timescale Cloud service:
  - Copy data at up to 150 GB/hr. You need at least a 4 CPU/16GB source database and a 4 CPU/16GB target service.
  - Copy the publication tables in parallel. Large tables are still copied using a single connection. Parallel copying is in the backlog.
  - Forget foreign key relationships. Livesync disables foreign key validation during the sync. For example, if a `metrics` table refers to the `id` column on the `tags` table, you can still sync only the `metrics` table without worrying about their foreign key relationships.
  - Track progress. PostgreSQL exposes `COPY` progress in `pg_stat_progress_copy`.
- Synchronize real-time changes from a PostgreSQL instance to a Timescale Cloud service.
- Add and remove tables on demand using the PostgreSQL `PUBLICATION` interface.
- Enable features such as hypertables, columnstore, and continuous aggregates on your logical replica.
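
The 150 GB/hr figure is an upper bound on throughput, so it gives a lower bound on how long the initial copy takes. The Python sketch below does that arithmetic. The function name `min_initial_copy_hours` is hypothetical. Real throughput depends on hardware, network, and how your data is spread across tables, because a large table is copied on a single connection.

```python
# Best-case rate from the documentation, with at least a 4 CPU/16GB source
# database and a 4 CPU/16GB target service.
MAX_COPY_RATE_GB_PER_HOUR = 150


def min_initial_copy_hours(database_size_gb: float) -> float:
    """Lower bound on initial copy time, assuming the best-case copy rate."""
    if database_size_gb < 0:
        raise ValueError("database size cannot be negative")
    return database_size_gb / MAX_COPY_RATE_GB_PER_HOUR


# A 600 GB source needs at least 4 hours to copy at 150 GB/hr.
print(min_initial_copy_hours(600))  # 4.0
```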
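
Table selection goes through a standard PostgreSQL publication, so adding or removing a table is an `ALTER PUBLICATION` statement on the source. The Python sketch below only builds the SQL text. The publication name `analytics` and the table names are hypothetical, and the sketch does not reflect how Livesync names or manages its own publication. It takes unqualified table names, which resolve through the source's `search_path`. Run the resulting statements with your usual PostgreSQL client.

```python
def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes.

    Quoted identifiers are case-sensitive, so pass lowercase names for tables
    that were created without quotes.
    """
    return '"' + name.replace('"', '""') + '"'


def create_publication_sql(publication: str, tables: list[str]) -> str:
    """Build a CREATE PUBLICATION ... FOR TABLE statement for the given tables."""
    if not tables:
        raise ValueError("at least one table is required")
    table_list = ", ".join(quote_ident(t) for t in tables)
    return f"CREATE PUBLICATION {quote_ident(publication)} FOR TABLE {table_list};"


def alter_publication_sql(publication: str, action: str, table: str) -> str:
    """Build an ALTER PUBLICATION ... ADD TABLE or DROP TABLE statement."""
    if action not in ("ADD", "DROP"):
        raise ValueError("action must be 'ADD' or 'DROP'")
    return f"ALTER PUBLICATION {quote_ident(publication)} {action} TABLE {quote_ident(table)};"


print(create_publication_sql("analytics", ["metrics", "tags"]))
# CREATE PUBLICATION "analytics" FOR TABLE "metrics", "tags";
print(alter_publication_sql("analytics", "DROP", "tags"))
# ALTER PUBLICATION "analytics" DROP TABLE "tags";
```

The builders always quote identifiers, which keeps table names containing spaces or quotes from breaking the statement.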
