This document provides detailed step-by-step instructions to migrate data using the dual-write and backfill migration method from a source database which is not using PostgreSQL to Timescale.

Note

In the context of migrations, your existing production database is referred to as the "source" database, while the new Timescale database that you intend to migrate your data to is referred to as the "target" database.

In detail, the migration process consists of the following steps:

- Set up a target database instance in Timescale.

- Modify the application to write to a secondary database.

- Set up schema and migrate relational data to target database.

- Start the application in dual-write mode.

- Determine the completion point

T. - Backfill time-series data from source to target.

- Enable background jobs (policies) in the target database.

- Validate that all data is present in target database.

- Validate that target database can handle production load.

- Switch application to treat target database as primary (potentially continuing to write into source database, as a backup).

Tip

If you get stuck, you can get help by either opening a support request, or take

your issue to the #migration channel in the community slack,

where the developers of this migration method are there to help.

You can open a support request directly from the Timescale console, or by email to support@timescale.com.

Create a database service in Timescale.

If you intend on migrating more than 400 GB, open a support request to ensure that enough disk is pre-provisioned on your Timescale instance.

You can open a support request directly from the Timescale console, or by email to support@timescale.com.

How exactly to do this is dependent on the language that your application is written in, and on how exactly your ingestion and application function. In the simplest case, you simply execute two inserts in parallel. In the general case, you must think about how to handle the failure to write to either the source or target database, and what mechanism you want to or can build to recover from such a failure.

Should your time-series data have foreign-key references into a plain table,

you must ensure that your application correctly maintains the foreign key

relations. If the referenced column is a *SERIAL type, the same row inserted

into the source and target may not obtain the same autogenerated id. If this

happens, the data backfilled from the source to the target is internally

inconsistent. In the best case it causes a foreign key violation, in the worst

case, the foreign key constraint is maintained, but the data references the

wrong foreign key. To avoid these issues, best practice is to follow

live migration.

You may also want to execute the same read queries on the source and target database to evaluate the correctness and performance of the results which the queries deliver. Bear in mind that the target database spends a certain amount of time without all data being present, so you should expect that the results are not the same for some period (potentially a number of days).

Describing exactly how to migrate your data from every possible source is not feasible, instead we tell you what needs to be done, and hope that you find resources to support you.

In this step, you need to prepare the database to receive time-series data which is dual-written from your application. If you're migrating from another time-series database then you only need to worry about setting up the schema for the hypertables which will contain time-series data. For some background on what hypertables are, consult the tables and hypertables section of the getting started guide.

If you're migrating from a relational database containing both relational and time-series data, you also need to set up the schema for the relational data, and copy it over in this step, excluding any of the time-series data. The time-series data is backfilled in a subsequent step.

Our assumption in the dual-write and backfill scenario is that the volume of relational data is either very small in relation to the time-series data, so that it is not problematic to briefly stop your production application while you copy the relational data, or that it changes infrequently, so you can get a snapshot of the relational metadata without stopping your application. If this is not the case for your application, you should reconsider using the dual-write and backfill method.

Note

If you're planning on experimenting with continuous aggregates, we recommend that you first complete the dual-write and backfill migration, and only then create continuous aggregates on the data. If you create continuous aggregates on a hypertable before backfilling data into it, you must refresh the continuous aggregate over the whole time range to ensure that there are no holes in the aggregated data.

With the target database set up, your application can now be started in dual-write mode.

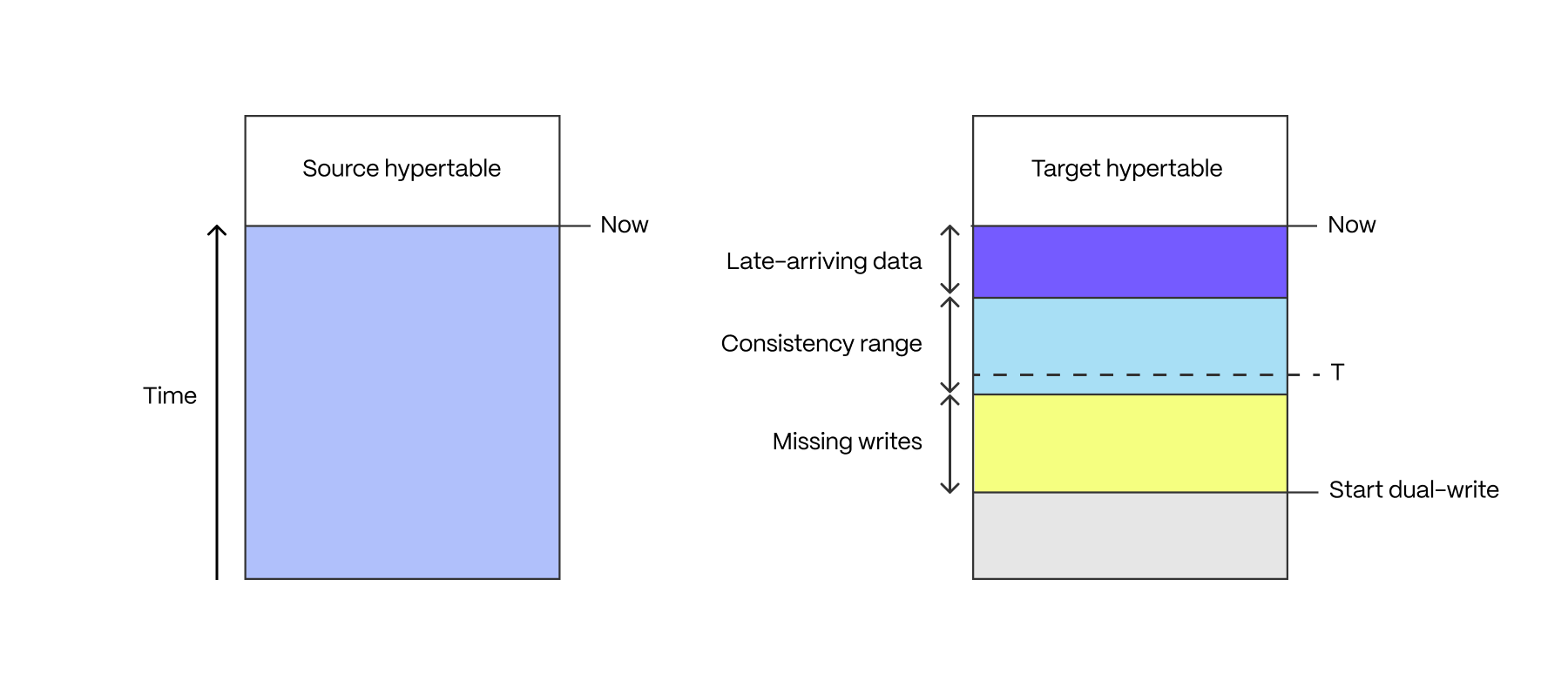

After dual-writes have been executing for a while, the target hypertable contains data in three time ranges: missing writes, late-arriving data, and the "consistency" range

If the application is made up of multiple writers, and these writers did not all simultaneously start writing into the target hypertable, there is a period of time in which not all writes have made it into the target hypertable. This period starts when the first writer begins dual-writing, and ends when the last writer begins dual-writing.

Some applications have late-arriving data: measurements which have a timestamp in the past, but which weren't written yet (for example from devices which had intermittent connectivity issues). The window of late-arriving data is between the present moment, and the maximum lateness.

The consistency range is the range in which there are no missing writes, and in which all data has arrived, that is between the end of the missing writes range and the beginning of the late-arriving data range.

The length of these ranges is defined by the properties of the application, there is no one-size-fits-all way to determine what they are.

The completion point T is an arbitrarily chosen time in the consistency range.

It is the point in time to which data can safely be backfilled, ensuring that

there is no data loss.

The completion point should be expressed as the type of the time column of

the hypertables to be backfilled. For instance, if you're using a TIMESTAMPTZ

time column, then the completion point may be 2023-08-10T12:00:00.00Z. If

you're using a BIGINT column it may be 1695036737000.

If you are using a mix of types for the time columns of your hypertables, you

must determine the completion point for each type individually, and backfill

each set of hypertables with the same type independently from those of other

types.

Dump the data from your source database on a per-table basis into CSV format,

and restore those CSVs into the target database using the

timescaledb-parallel-copy tool.

Determine the window of data that to be copied from the source database to the

target. Depending on the volume of data in the source table, it may be sensible

to split the source table into multiple chunks of data to move independently.

In the following steps, this time range is called <start> and <end>.

Usually the time column is of type timestamp with time zone, so the values

of <start> and <end> must be something like 2023-08-01T00:00:00Z. If the

time column is not a timestamp with time zone then the values of <start>

and <end> must be the correct type for the column.

If you intend to copy all historic data from the source table, then the value

of <start> can be '-infinity', and the <end> value is the value of the

completion point T that you determined.

The dual-write process may have already written data into the target database

in the time range that you want to move. In this case, the dual-written data

must be removed. This can be achieved with a DELETE statement, as follows:

psql $TARGET -c "DELETE FROM <hypertable> WHERE time >= <start> AND time < <end>);"

Important

The BETWEEN operator is inclusive of both the start and end ranges, so it is not recommended to use it.

Compression policies must be turned off for the target hypertable while data is being backfilled. This prevents the compression policy from compressing chunks which are only half full.

In the following command, replace <hypertable> with the fully qualified table

name of the target hypertable, for example public.metrics:

psql -d $TARGET -f -v hypertable=<hypertable> - <<'EOF'SELECT public.alter_job(j.id, scheduled=>false)FROM _timescaledb_config.bgw_job jJOIN _timescaledb_catalog.hypertable h ON h.id = j.hypertable_idWHERE j.proc_schema IN ('_timescaledb_internal', '_timescaledb_functions')AND j.proc_name = 'policy_compression'AND j.id >= 1000AND format('%I.%I', h.schema_name, h.table_name)::text::regclass = :'hypertable'::text::regclass;EOF

Refer to the documentation for your source database in order to determine how to dump a table into a CSV. You must ensure the CSV contains only data before the completion point. You should apply this filter when dumping the data from the source database.

You can load a CSV file into a hypertable using timescaledb-parallel-copy as

follows. Set the number of workers equal to the number of CPU cores in your

target database:

timescaledb-parallel-copy \--connection $TARGET \--table <target_hypertable> \--workers 8 \--file <table dump for source table>

The above command is not transactional. If there is a connection issue, or some other issue which causes it to stop copying, the partially copied rows must be removed from the target (using the instructions in step 6b above), and then the copy can be restarted.

In the following command, replace <hypertable> with the fully qualified table

name of the target hypertable, for example public.metrics:

psql -d $TARGET -f -v hypertable=<hypertable> - <<'EOF'SELECT public.alter_job(j.id, scheduled=>true)FROM _timescaledb_config.bgw_job jJOIN _timescaledb_catalog.hypertable h ON h.id = j.hypertable_idWHERE j.proc_schema IN ('_timescaledb_internal', '_timescaledb_functions')AND j.proc_name = 'policy_compression'AND j.id >= 1000AND format('%I.%I', h.schema_name, h.table_name)::text::regclass = :'hypertable'::text::regclass;EOF

Now that all data has been backfilled, and the application is writing data to both databases, the contents of both databases should be the same. How exactly this should best be validated is dependent on your application.

If you are reading from both databases in parallel for every production query, you could consider adding an application-level validation that both databases are returning the same data.

Another option is to compare the number of rows in the source and target tables, although this reads all data in the table which may have an impact on your production workload.

Now that dual-writes have been in place for a while, the target database should be holding up to production write traffic. Now would be the right time to determine if the target database can serve all production traffic (both reads and writes). How exactly this is done is application-specific and up to you to determine.

Once you've validated that all the data is present, and that the target database can handle the production workload, the final step is to switch to the target database as your primary. You may want to continue writing to the source database for a period, until you are certain that the target database is holding up to all production traffic.

Keywords

Found an issue on this page?Report an issue or Edit this page in GitHub.